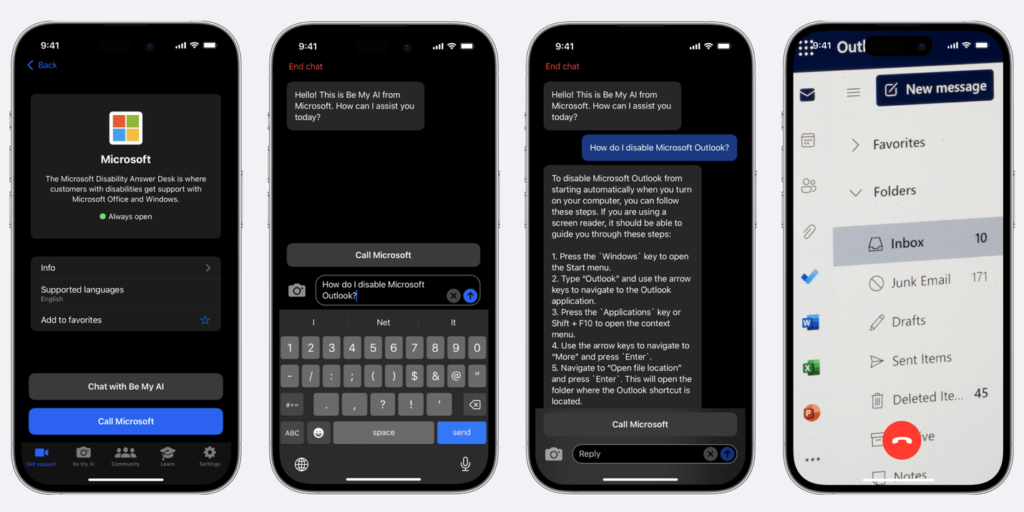

Since November 2023, Microsoft Disability Answer Desk callers who are blind or have low vision have been able to use Be My AI™ to handle all types of customer service calls: everything from Excel spreadsheet formulas to interpreting product instructions and diagrams, rebooting a laptop, installing and updating software, and much more.

This is the first use of AI accepting image inputs to augment traditional customer service for people with disabilities. The global deployment meets what Be My Eyes refers to as the 3S Success Criteria™:

- Success: a 90% successful resolution rate by Be My AI™ for Microsoft customers who try it. Put another way: only 10% of consumers using AI interactions are choosing to escalate to a human call center agent.

- Speed: Be My AI™ solves customer issues in one-third the time on average compared to Be My Eyes calls answered by a live Microsoft agent (4 minutes on average for Be My AI vs. 12 minutes on average for live agent support).

- Satisfaction: customer satisfaction ratings have improved with the implementation of Be My Eyes in Microsoft’s Disability Answer Desk, with interactions averaging 4.85 out of 5 stars.

GitHub Copilot

In other AI accessibility news, I’ve been reading about GitHub Copilot. I’m generally in favor of automation, to a degree. In the early days of the web, I was an old-school HTML coder, back when we still built page layouts using table tags (it was pretty terrible, but we didn’t know better yet). I remember when the first WYSIWYG editors started coming out in the ’90s. The first time I saw Dreamweaver I was simultaneously stoked and horrified. Sure, it made it easier for anyone to build out web pages. Unfortunately, it also generated so much unnecessary, nested, bloated HTML.

Fast forward to today. GitHub describes its Copilot service as an “AI pair programmer.” Examples on its website show it writing whole functions from a comment or a name. Unfortunately, many of Copilot’s suggestions include serious accessibility bugs. Developer Matthew Hallonbacka posted about his findings as they relate to:

- Alt attributes

- Identifiable links

- Focus states

- Spans that should be buttons

- Color contrast ratios
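Of the items above, color contrast is the easiest to check with plain arithmetic. Here is a minimal sketch of the WCAG 2.x contrast ratio formula, assuming six-digit hex colors; the function names are my own and are not taken from Copilot or from Hallonbacka’s post.

```python
def _channel_to_linear(value: int) -> float:
    """Convert one 0-255 sRGB channel to its linear value (WCAG 2.x definition)."""
    c = value / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a six-digit hex color such as '#1a2b3c'."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return (0.2126 * _channel_to_linear(r)
            + 0.7152 * _channel_to_linear(g)
            + 0.0722 * _channel_to_linear(b))


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors, from 1 (identical) up to 21 (black on white)."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


if __name__ == "__main__":
    # Gray text on a white background, a combination worth double-checking.
    print(round(contrast_ratio("#777777", "#ffffff"), 2))
```

WCAG level AA asks for a ratio of at least 4.5:1 for normal-size text, so a suggested color pair that falls below that is worth changing before you accept the code.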

The danger is that developers will accept code suggestions, assuming they are valid. Be cautious when using Copilot. If you’re expecting a certain type of suggestion but receive one with extra attributes, take the time to look up those attributes. Don’t use the code until you understand what every part of it does.
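A quick automated pass can back up that manual review, though it doesn’t replace it. The sketch below uses Python’s standard `html.parser` module to flag two of the patterns listed earlier: images with no `alt` attribute and clickable `<span>` or `<div>` elements that probably should be buttons. The `A11yChecker` class and `check` function are hypothetical names of mine, and the rules are deliberately simplistic.

```python
from html.parser import HTMLParser


class A11yChecker(HTMLParser):
    """Collects a few obvious accessibility problems from an HTML snippet."""

    def __init__(self):
        super().__init__()
        self.issues = []  # list of (line_number, message) tuples

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        line, _ = self.getpos()

        # A missing alt attribute is a problem; alt="" is valid for decorative images.
        if tag == "img" and "alt" not in attr_map:
            self.issues.append((line, "img has no alt attribute"))

        # A span or div with a click handler is not keyboard-focusable by default.
        if (tag in ("span", "div") and "onclick" in attr_map
                and attr_map.get("role") != "button"):
            self.issues.append(
                (line, f"clickable <{tag}> should probably be a <button>"))


def check(html: str):
    """Return the list of issues found in the given HTML string."""
    checker = A11yChecker()
    checker.feed(html)
    checker.close()
    return checker.issues


if __name__ == "__main__":
    suggestion = '<img src="logo.png">\n<span onclick="save()">Save</span>'
    for line, message in check(suggestion):
        print(f"line {line}: {message}")
```

A script like this only catches the mechanical mistakes. Whether alt text is actually meaningful, or whether a link makes sense out of context, still takes a human reading the code.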